1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

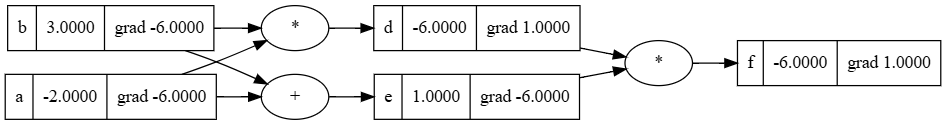

| import math

import random

from sklearn.tree import export_graphviz

from graphviz import Digraph

def trace(root):

nodes, edges = set(), set()

def build(v):

if v not in nodes:

nodes.add(v)

for child in v._prev:

edges.add((child, v))

build(child)

build(root)

return nodes, edges

def draw_dot(root):

dot = Digraph(format='svg', graph_attr={'rankdir': 'LR'})

nodes, edges = trace(root)

for n in nodes:

uid = str(id(n))

dot.node(name = uid, label = "{ %s | %.4f | grad %.4f }" % (n.label, n.data, n.grad), shape='record')

if n._op:

dot.node(name = uid + n._op, label = n._op)

dot.edge(uid + n._op, uid)

for n1, n2 in edges:

dot.edge(str(id(n1)), str(id(n2)) + n2._op)

dot.save('output.dot')

return dot

class Value:

def __init__(self, data, _children=(), _op='', label=''):

self.data = data

self.grad = 0.0

self._backward = lambda: None

self._prev = set(_children)

self._op = _op

self.label = label

def __repr__(self):

return f"Value(data={self.data})"

def __add__(self, other):

other = other if isinstance(other, Value) else Value(other)

out = Value(self.data + other.data, (self, other), '+')

def _backward():

self.grad += 1.0 * out.grad

other.grad += 1.0 * out.grad

out._backward = _backward

return out

def __mul__(self, other):

other = other if isinstance(other, Value) else Value(other)

out = Value(self.data * other.data, (self, other), '*')

def _backward():

self.grad += other.data * out.grad

other.grad += self.data * out.grad

out._backward = _backward

return out

def __pow__(self, other):

assert isinstance(other, (int, float)), "only supporting int/float powers for now"

out = Value(self.data**other, (self,), f'**{other}')

def _backward():

self.grad += other * (self.data ** (other - 1)) * out.grad

out._backward = _backward

return out

def __rmul__(self, other):

return self * other

def __truediv__(self, other):

return self * other**-1

def __neg__(self):

return self * -1

def __sub__(self, other):

return self + (-other)

def __radd__(self, other):

return self + other

def tanh(self):

x = self.data

t = (math.exp(2*x) - 1)/(math.exp(2*x) + 1)

out = Value(t, (self, ), 'tanh')

def _backward():

self.grad += (1 - t**2) * out.grad

out._backward = _backward

return out

def exp(self):

x = self.data

out = Value(math.exp(x), (self, ), 'exp')

def _backward():

self.grad += out.data * out.grad

out._backward = _backward

return out

def backward(self):

topo = []

visited = set()

def build_topo(v):

if v not in visited:

visited.add(v)

for child in v._prev:

build_topo(child)

topo.append(v)

build_topo(self)

self.grad = 1.0

for node in reversed(topo):

node._backward()

class Neuron:

def __init__(self, nin):

self.w = [Value(random.uniform(-1,1)) for _ in range(nin)]

self.b = Value(random.uniform(-1,1))

def __call__(self, x):

act = sum((wi*xi for wi,xi in zip(self.w,x)), self.b)

out = act.tanh()

return out

def parameters(self):

return self.w + [self.b]

class Layer:

def __init__(self, nin, nout):

self.neurons = [Neuron(nin) for _ in range(nout)]

def __call__(self, x):

outs = [n(x) for n in self.neurons]

return outs[0] if len(outs) == 1 else outs

def parameters(self):

return [p for neuron in self.neurons for p in neuron.parameters()]

class MLP:

def __init__(self, nin, nouts):

sz = [nin] + nouts

self.layers = [Layer(sz[i],sz[i+1]) for i in range(len(nouts))]

def __call__(self, x):

for layer in self.layers:

x = layer(x)

return x

def parameters(self):

return [p for layer in self.layers for p in layer.parameters()]

def main():

n = MLP(3, [4, 4, 1])

xs = [

[2.0, 3.0, -1.0],

[3.0, -1.0, 0.5],

[0.5, 1.0, 1.0],

[1.0, 1.0, -1.0],

]

ys = [1.0, -1.0, -1.0, 1.0]

for k in range(20):

ypred = [n(x) for x in xs]

loss = sum((yout - ygt)**2 for ygt, yout in zip(ys, ypred))

for p in n.parameters():

p.grad = 0.0

loss.backward()

for p in n.parameters():

p.data += -0.1 * p.grad

print(k, loss.data)

print(ypred)

main()

|